OpenAI is accelerating its efforts to dominate the nascent AI market: this week the company revealed the newest and most powerful model it has developed to date, called GPT-4o. This new large language model (LLM) is multimodal, meaning it can both understand and generate text, images, and audio, and it does so at unprecedented speed.

The launch represents OpenAI’s biggest technical leap since the release of GPT-4 last year and ChatGPT in late 2022. GPT-4o promises to supercharge OpenAI’s popular AI chatbot and open up entirely new frontiers for more natural and multimodal interactions between humans and AI systems.

“GPT-4o reasons across voice, text and vision,” said OpenAI’s Chief Technology Officer, Mira Murati, when presenting the firm’s new product. “This is incredibly important, because we’re looking at the future of interaction between ourselves and machines.”

OpenAI Showcases GPT-4o’s Powerful Features During a Live Demo

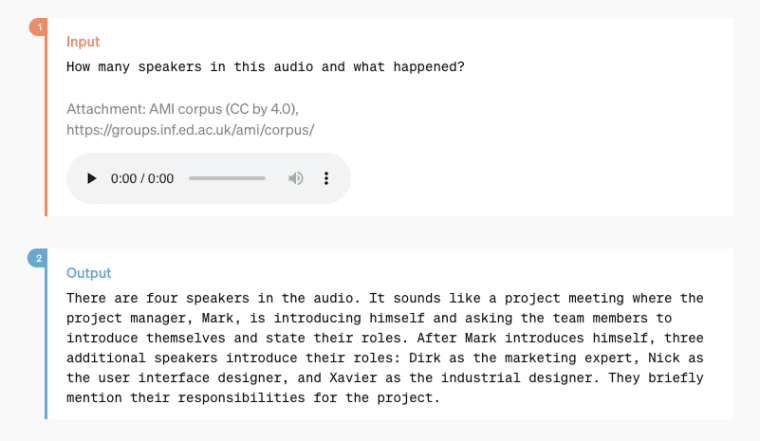

During a live demo in San Francisco, Murati and other OpenAI researchers showcased GPT-4o’s speed and fluency across multiple types of communication. According to OpenAI, the model can respond to voice prompts with a natural voice in as little as 232 milliseconds, with an average of around 320 milliseconds. That brings it close to the roughly 200ms response times typical of human conversation.

It can analyze images and video in real-time, translate menus written in a foreign language, provide commentary on live sports action, and go through technical schematics. GPT-4o can also generate outputs in different formats including text, images, and more.

What’s more appealing, GPT-4o unifies all of these capabilities into a single neural network rather than relying on separate specialized models for each modality. This major change in the model’s architecture eliminates lag and enables seamless multimodal exchanges between humans and the AI assistant.

“When you have three different models that work together, you introduce a lot of latency in the experience, and it breaks the immersion of the experience,” Murati emphasized.

She added: “But when you have one model that natively reasons across audio, text and vision, then you cut all of the latency out and you can interact with ChatGPT more like we’re interacting now.”

The company has already begun rolling out GPT-4o’s text and image understanding capabilities to paid subscribers of ChatGPT Plus and enterprise customers. Meanwhile, voice interactions powered by the new model will begin alpha testing with Plus users in the coming weeks.

“The new voice (and video) mode is the best computer interface I’ve ever used,” commented Sam Altman, the Chief Executive Officer of OpenAI.

He added: “It feels like AI from the movies; and it’s still a bit surprising to me that it’s real. Getting to human-level response times and expressiveness turns out to be a big change.”

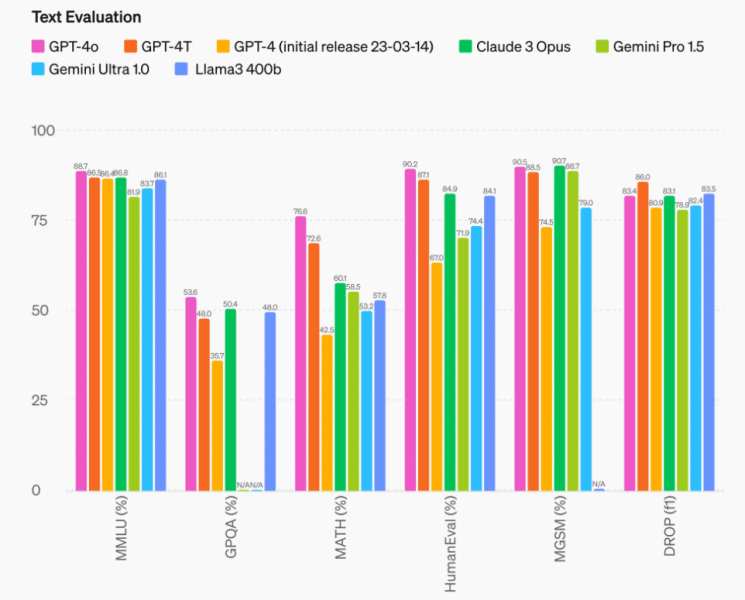

GPT-4o vs Prior Models: Major Upgrades

While OpenAI has allowed multimodal inputs like images with prior versions of ChatGPT, the original GPT-4 released in March 2023 was still primarily a text model. Users could share visual information but the responses they got from the model were still text-based outputs.

GPT-4 represented a major leap over GPT-3.5 as it featured improved factual knowledge, reasoning abilities, and multilingual support across 20+ languages. It scored in the 90th percentile on the notoriously difficult US bar exam and displayed coding skills that could easily rival most human programmers.

Now, GPT-4o builds on those textual talents while also making giant strides in audio and visual understanding that prior models lacked. Here’s a summary of the most relevant differences and improvements that come with this new model release.

Real-Time Voice Interactions Featuring Human-Like Tone Adjustments

Perhaps GPT-4o’s most striking new capability is its ability to engage in real-time voice conversations that are almost indistinguishable from talking to another person. In the demo, the AI responded to verbal prompts with fluent spoken replies in a human voice. The model was also capable of changing tones from silly to professional depending on the context of the conversation.

GPT-4o is equipped to analyze the emotions conveyed by a speaker’s vocal inflections and change its own manner of speaking accordingly. At one point, it even sang on request while telling a bedtime story. This real-time responsiveness represents a significant improvement compared to Voice Mode, the initial audio-based feature launched by OpenAI for ChatGPT.

The earlier version of Voice Mode chained separate steps: it transcribed voice inputs to text, passed them through the GPT language model, and then converted the text outputs to speech. This resulted in higher latency compared to GPT-4o’s fully streamlined pipeline.
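To illustrate why chaining stages adds delay, here is a minimal Python sketch of the two designs. The stage names and every millisecond figure are hypothetical placeholders chosen for illustration, not measurements published by OpenAI. The only point is that a sequential pipeline pays the sum of its stages, while a single model pays once.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    latency_ms: float  # hypothetical figure, for illustration only


def pipeline_latency(stages):
    """Total delay of a sequential pipeline: each stage waits for the previous one."""
    return sum(stage.latency_ms for stage in stages)


# Cascaded design: speech-to-text -> language model -> text-to-speech.
cascaded = [
    Stage("speech_to_text", 300.0),
    Stage("language_model", 900.0),
    Stage("text_to_speech", 400.0),
]

# Unified design: one model takes audio in and produces audio out.
unified = [Stage("end_to_end_model", 320.0)]

if __name__ == "__main__":
    print(f"cascaded: {pipeline_latency(cascaded):.0f} ms")
    print(f"unified:  {pipeline_latency(unified):.0f} ms")
```

Beyond raw delay, a cascaded design also hands only text to the middle stage, so cues such as tone of voice never reach the language model, which fits the article's point about GPT-4o reading vocal inflections directly.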

Multilingual Support

While GPT-4 represented a big leap for OpenAI in handling inputs across more than 20 languages, GPT-4o now supports around 50 languages across text and speech. This expands the range of potential applications for the AI model, including real-time translation services.

During the demo, Murati held a conversation with the AI model while switching between English and Italian prompts. She received fluent translated responses in the opposite language, powered by the model’s enhanced linguistic skills.

Multimodal Inputs and Outputs

One of the most versatile aspects of GPT-4o is its ability to understand combinations of text, images, and audio all at once as inputs and then generate the desired output in the format that the user prefers.

For example, the AI can accept a photo of a written document and then generate a spoken summary that highlights its key details. Meanwhile, it could watch a video of someone working through a coding problem and provide explanatory comments in writing about the programming logic, mistakes, and suggested remedies.

This multimodal flexibility opens up major possibilities across numerous industries and applications beyond ChatGPT itself in areas like education, creative media production, data visualization, and more.

Low Latency and Cost

Despite its significantly more complex multimodal architecture, OpenAI claims that GPT-4o will actually be faster and cheaper to run than its predecessor. According to the company, the new model is twice as fast and half the price of GPT-4 Turbo in the API.

This improvement in the model’s efficiency comes from a unified multimodal neural network that avoids separating the pipelines involved in processing different data formats. OpenAI is already rolling out GPT-4o to its API to enable developers and enterprises to tap into this low-latency, multimodal AI solution at a lower operational cost.
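For developers, a request that mixes text and an image might look like the sketch below. It assumes the message layout used by OpenAI's Chat Completions API and the official `openai` Python package. The helper names `build_chat_request` and `send`, the question text, and the example URL are hypothetical and only there for illustration. Sending the request requires the package to be installed and an `OPENAI_API_KEY` environment variable, so the runnable part only builds and prints the payload.

```python
import json


def build_chat_request(question: str, image_url: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions-style payload with one text part and one image part."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


def send(request: dict) -> str:
    """Send the payload with the `openai` package (assumes OPENAI_API_KEY is set)."""
    from openai import OpenAI  # imported lazily so the builder works without the SDK

    client = OpenAI()
    response = client.chat.completions.create(**request)
    return response.choices[0].message.content


if __name__ == "__main__":
    # Placeholder question and URL; replace with real values before calling send().
    request = build_chat_request(
        "What dishes are on this menu?",
        "https://example.com/menu.jpg",
    )
    print(json.dumps(request, indent=2))
```

Keeping the payload builder separate from the network call makes it easy to inspect or log exactly what is sent before any API cost is incurred.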

Apple and Google May Reveal Their Own Advancements in the AI Field This Week

While OpenAI has raised the bar yet again among AI labs with the release of GPT-4o, the arms race to develop the most powerful models is still raging on. This week, Google is also expected to provide updates to its own multimodal AI called Gemini during the annual Google I/O developer conference.

Meanwhile, Apple is expected to share its own breakthroughs during its Worldwide Developers Conference, which begins on June 10th. Moreover, smaller players like Anthropic have kept pushing the boundaries of what’s possible with generative AI.

Anthropic, the developer of the Claude models trained with its Constitutional AI approach, recently announced that Claude is now available to users in the European Union and to iOS users through a new app on Apple’s App Store.

However, for OpenAI and its top backer Microsoft (MSFT), GPT-4o represents another big leap that affirms their dominance in this highly competitive field. At this pace, we may only be months away from witnessing the first artificial general intelligence (AGI), or at least something resembling it.

One additional step that needs to be taken to achieve this major milestone would involve the integration of video-processing capabilities. The release of Sora back in February this year marked an important step forward in this direction.

“We know that these models are getting more and more complex, but we want the experience of interaction to actually become more natural, easy, and for you not to focus on the UI at all, but just focus on the collaboration with ChatGPT,” Murati highlighted.

“For the past couple of years, we’ve been very focused on improving the intelligence of these models … But this is the first time that we are really making a huge step forward when it comes to the ease of use,” she added.

What seems strikingly evident is that the technology showcased this week by OpenAI is bringing us several steps closer to making the AGI vision a not-so-distant reality.